Pretty printing file-write times

Computational physics solvers often write solution files periodically. CFD codes, for instance, dump the velocity, pressure, and other field data at regular intervals. More often than not, these codes run on large clusters whose job schedulers allocate a fixed period of time, called the wall-time, to each run. In such a scenario, I find it extremely helpful to know the time taken between file-write events, so that I can answer questions like:

- how many files can I expect during the run?

- when will the next file be written?

- is the remaining wall-time enough for the next file to be written?

Take, for example, the NekRS solver, which writes solution files whose names match the pattern `*0.f0*`.

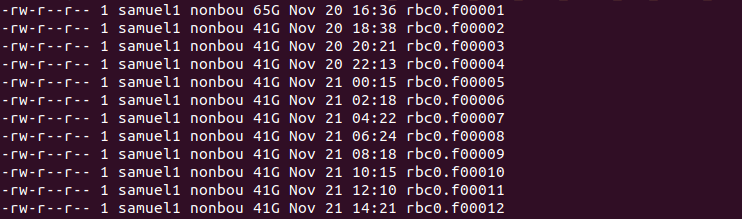

During a run, the solver generates a number of these files, and listing them at the command line produces output like the following:

Of course, one can look at the time-stamps, calculate the time elapsed between files, and extrapolate the times at which future files will be written. I found myself doing this so often that it seemed easier to delegate the task to a Python script that interacts with the shell and does the calculation for me.

The Python script uses the `subprocess` module to issue a `stat` command to the shell and decode the output into a list.
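A minimal sketch of this step might look like the following. It assumes GNU coreutils `stat`, whose `-c %Y` format prints each file's last-modification time as whole seconds since the Unix epoch; the function names are hypothetical and not taken from `ftdPrint`.

```python
import subprocess


def parse_stat_output(text):
    """Turn the text printed by `stat -c %Y` (one epoch time per line) into ints."""
    return [int(token) for token in text.split()]


def stat_mtimes(paths):
    """Ask the shell's `stat` for the modification time of each path.

    Assumes GNU coreutils `stat` (`-c %Y` = seconds since the epoch);
    BSD/macOS `stat` uses different flags.
    """
    result = subprocess.run(
        ["stat", "-c", "%Y", *paths],
        capture_output=True,  # collect stdout/stderr as bytes
        check=True,           # raise CalledProcessError if stat fails
    )
    # Decode the raw bytes and split them into one value per file,
    # in the same order as the paths were given.
    return parse_stat_output(result.stdout.decode())
```

Passing the arguments as a list, rather than building a command string with `shell=True`, avoids quoting problems with unusual file names.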

The `datetime` module is used to parse the files' time-stamps and compute the time elapsed between consecutive files.
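Assuming the modification times arrive as epoch seconds (as `stat -c %Y` prints them), the `datetime` step could be sketched as below. Sorting first guards against the shell listing files out of write order; `write_intervals` is a hypothetical helper, not the script's own function.

```python
from datetime import datetime, timezone


def write_intervals(epoch_seconds):
    """Return (stamps, gaps) for a list of modification times in epoch seconds.

    stamps: timezone-aware datetimes (UTC), sorted chronologically.
    gaps:   timedelta between each pair of consecutive file writes.
    """
    # Aware UTC datetimes avoid off-by-an-hour gaps across daylight-saving changes.
    stamps = sorted(datetime.fromtimestamp(t, tz=timezone.utc) for t in epoch_seconds)
    gaps = [later - earlier for earlier, later in zip(stamps, stamps[1:])]
    return stamps, gaps


stamps, gaps = write_intervals([1700000000, 1700000600, 1700001210])
for stamp, gap in zip(stamps[1:], gaps):
    # astimezone() with no argument converts to the machine's local time for display.
    print(stamp.astimezone().strftime("%Y-%m-%d %H:%M:%S"), gap)
```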

Furthermore, a feeble attempt is made to handle outliers by using the `numpy` module to filter out wildly varying time differences: only values within three standard deviations of the mean are kept.
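The three-sigma rule can be written with NumPy in a few lines. This is a sketch of the idea rather than the script's actual code; `filter_outliers` is a hypothetical name, and the gaps are assumed to be plain numbers of seconds.

```python
import numpy as np


def filter_outliers(gaps_seconds, n_sigma=3.0):
    """Keep only gaps within n_sigma standard deviations of the mean."""
    gaps = np.asarray(gaps_seconds, dtype=float)
    mean, std = gaps.mean(), gaps.std()  # population std (ddof=0)
    # Boolean mask: True where the gap is "typical".
    keep = np.abs(gaps - mean) <= n_sigma * std
    return gaps[keep]


# Nineteen regular 60 s gaps plus one stalled write of 600 s.
gaps = [60.0] * 19 + [600.0]
print(filter_outliers(gaps))  # the 600 s gap is dropped
```

One caveat: with n samples, no single value can lie more than (n - 1)/sqrt(n) population standard deviations from the mean, so with ten or fewer gaps a lone outlier can never exceed three sigma and the filter keeps everything.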

Using the script requires no "installation" as such: simply download the Python script (named `ftdPrint`), make it executable, and save it in a directory listed in the `$PATH` environment variable.
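For a script to run as a bare command from anywhere on `$PATH`, it needs a shebang on its first line and the executable bit set (for example with `chmod +x`). A hypothetical skeleton of such an entry point is sketched below; note that the shell, not Python, expands a glob such as `rbc0.f0*`, so the file names arrive already listed in `sys.argv`.

```python
#!/usr/bin/env python3
"""Hypothetical skeleton of a command-line entry point in the style of ftdPrint."""
import sys


def main(argv):
    """Handle the command line; argv[1:] are the (already glob-expanded) file names."""
    files = argv[1:]
    if len(files) < 2:
        # At least two files are needed to measure a single write interval.
        print(f"usage: {argv[0]} FILE FILE [FILE ...]", file=sys.stderr)
        return 1
    for name in files:
        print(name)  # placeholder: the real script would report timings here
    return 0
```

In a real file, the last lines would normally be `if __name__ == "__main__": sys.exit(main(sys.argv))`, so that running the command hands the expanded file list to `main` and its return value becomes the exit status.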

Since my solution files in the example above are of the format rbc0.f0*,

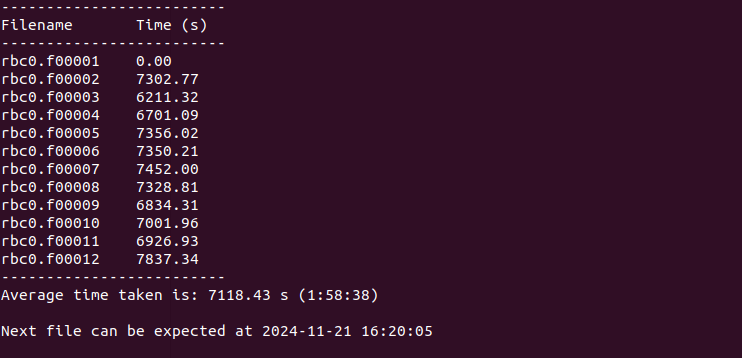

running the command ftdPrint rbc0.f0* gives a neat list like below:

As the cherry on the cake, the script predicts the time at which the next file can be expected, allowing me to judge whether the running job's wall-time will be exceeded before the next file is written. If so, I can kill the job early and save a few core-hours. After all, when it comes to high-performance computing, few things are as aggravating as seeing a job killed just minutes before a solution-file dump. As always, the script is available on my GitHub page.
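The prediction boils down to simple arithmetic: add the typical gap between writes to the time of the latest file, then compare the result with the moment the wall-time runs out. The sketch below assumes the job's end time is known (for instance from the scheduler) and uses the mean gap as the "typical" interval; `predict_next_write` and `job_end` are hypothetical names.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean


def predict_next_write(write_times, job_end):
    """Estimate the next file-write time and whether it lands before job_end.

    write_times: datetimes of existing files (at least two, not all identical).
    job_end: datetime at which the scheduler will stop the job.
    Returns (next_write, fits, files_left), where files_left counts how many
    more files fit before job_end at the current pace.
    """
    stamps = sorted(write_times)
    gaps = [(b - a).total_seconds() for a, b in zip(stamps, stamps[1:])]
    interval = timedelta(seconds=mean(gaps))  # typical time between writes
    next_write = stamps[-1] + interval
    fits = next_write <= job_end
    # Whole intervals that still fit between the last write and the job's end.
    files_left = max(0, int((job_end - stamps[-1]) / interval))
    return next_write, fits, files_left


t0 = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
writes = [t0 + timedelta(minutes=m) for m in (0, 20, 40)]
next_write, fits, files_left = predict_next_write(writes, t0 + timedelta(minutes=75))
print(next_write, fits, files_left)  # 2024-01-01 13:00:00+00:00 True 1
```

The mean is a deliberately simple choice; feeding in the outlier-filtered gaps, or using the median, would make the estimate less sensitive to a single slow write.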

Happy computing!! :)